Fortnite has become a global phenomenon, and with Season 7 just kicking off there's no end in sight to the updates and tweaks. Fortnite takes a decidedly sillier approach to the battle royale genre, with a willingness to experiment, limited time game modes, holiday events, and more—not to mention plenty of aesthetic customizations that can be earned/purchased.

At this point, I'm not even sure why the Battle Royale mode is still labeled as Early Access—probably to stem complaints about bugs or something. Anyway, millions of people have played Fortnite BR and continue to do so, but while the somewhat cartoony graphics might lead you to think the game can run on potato hardware, that's not entirely true.

At the lowest settings, Fortnite can run on just about any PC built in the past five years. It's also available on mobile devices, which are generally far slower than even aging PCs. Officially, the minimum requirements for Fortnite are an Intel HD 4000 or better GPU and a 2.4GHz Core i3. The recommended hardware is quite a bit higher: GTX 660 or HD 7870, with a 2.8GHz or better Core i5. But what does that mean?

We've benchmarked the latest version, using the replay feature so that we can test the exact same sequence on each configuration. Full details of the settings and how much they affect (or don't affect) performance are below, but let's start with the features overview.

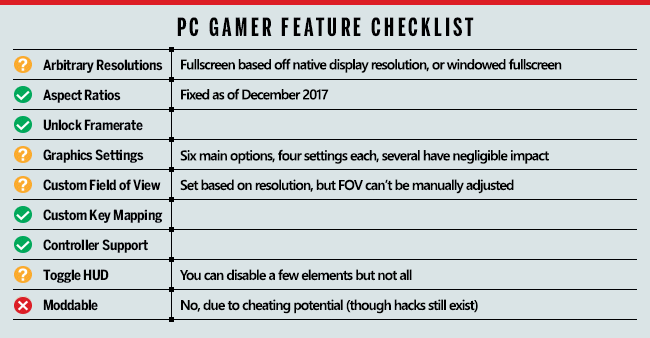

Starting with our features checklist, Fortnite had a bit of a rough start, but things have improved since launch. Previously, everyone was locked into the same FOV, with vertical cropping for widescreen and ultrawide resolutions. There's still a bit of odd behavior in that Fortnite only lists resolutions for your display's native aspect ratio if you're in fullscreen mode (meaning, on a standard 4K display you'll only see 16:9 resolutions), though you can get around this using Windowed Fullscreen mode in a pinch. FOV will adjust based on your resolution, but you're stuck with whatever FOV Epic has determined to be 'correct'—there's no FOV slider. That's better than when FOV was completely locked, though.

A word on our sponsor

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Fortnite on a bunch of different AMD and Nvidia GPUs and laptops—see below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

The number of settings to tweak is a bit limited, with only six primary settings plus motion blur, 3D resolution (scaling), and a framerate limit. Only three of these have more than a small impact, however. There's also no toggle HUD option for taking nice screenshots—you can turn off some of the HUD elements, but the compass, user name, and timer/players/kills counter always remain visible (at least as far as I could tell).

None of these are showstoppers, though fans of modding will be disappointed yet again with the lack of support. As is often the case these days, modding is currently out as it would potentially make it easier to create cheats/hacks—not that this hasn't happened anyway. Perhaps more critically (though Epic hasn't said this directly), mods would likely cut into the profitability of the item store. Why buy an outfit from the store if you could just create your own? Either way, modding isn't supported, and that's unlikely to change. You can build things in the Creative mode that might eventually end up showcased on The Block, but that's not really the same.

Fine tuning Fortnite settings

The global Quality preset is the easiest place to start tuning performance, with four levels along with 'Auto,' which will attempt to choose the best options for your hardware. Note that most of the settings will also use screen scaling (3D Resolution) to render at a lower resolution and then scale that to your display resolution. For testing, I've always set this to 100 percent, so no scaling is taking place. I've run the benchmark sequence at the Epic preset, and compared performance with the other presets as well as with each individual setting at the minimum level. This was done with both the GTX 1060 6GB and RX 580 8GB, and performance impact is based off that.
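To give a rough sense of what 3D Resolution scaling does to the rendering workload, here is a minimal sketch. It assumes the percentage is applied to each axis separately, which is how Unreal Engine's screen percentage generally behaves; the function name is hypothetical and not part of the game.

```python
def render_resolution(width, height, scale_percent):
    """Return the internal render size for a given 3D Resolution percentage.

    Assumption: the percentage scales width and height independently.
    """
    factor = scale_percent / 100
    return round(width * factor), round(height * factor)


native = (1920, 1080)
w, h = render_resolution(*native, 75)
pixel_share = (w * h) / (native[0] * native[1])
print(f"75% of 1080p renders at {w}x{h}, {pixel_share:.0%} of the native pixel count")
```

Under that assumption, even a modest reduction in the slider removes a large share of the pixels the GPU has to shade, which is why the benchmarks here pin it at 100 percent.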

Depending on your GPU, dropping from the Epic preset to High will boost performance by around 40 percent, the Medium preset can improve performance by about 140 percent, and the Low preset runs about 2.5 times faster than the Epic preset.
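Since the preset gains mix percentages and multipliers, a small sketch can put them on the same footing. The 60 fps Epic baseline below is purely illustrative (it is not a measured result), and the helper names are hypothetical.

```python
def fps_after_gain(base_fps, gain_percent):
    """Framerate after a percentage improvement over the baseline."""
    return base_fps * (1 + gain_percent / 100)


def gain_from_multiplier(multiplier):
    """Convert an 'N times faster' figure into a percentage improvement."""
    return (multiplier - 1) * 100


# Approximate preset gains relative to Epic, as quoted in the text.
preset_gains = {
    "High": 40,
    "Medium": 140,
    "Low": gain_from_multiplier(2.5),  # "2.5 times faster" is a 150 percent gain
}

epic_fps = 60  # hypothetical baseline, for illustration only
for preset, gain in preset_gains.items():
    print(f"{preset}: +{gain:.0f}% -> about {fps_after_gain(epic_fps, gain):.0f} fps")
```

Expressed this way, Low is only a small step beyond Medium in performance, while the visual difference between the two is considerably larger.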

You can see screenshots of the four presets above for reference, but the Low preset basically turns off most extra effects and results in a rather flat-looking environment. Medium adds a lot of additional effects, along with short-range shadows. High extends the range of shadows significantly, and then Epic… well, it looks mostly the same as High, perhaps with more accurate shadows (i.e., ambient occlusion).

For those wanting to do some additional tuning, let's look at the individual settings, using Epic as the baseline and comparing performance with each setting at low/off.

View Distance: Extends the range for rendered objects as well as the quality of distant objects. Depending on your CPU, the overall impact on performance is relatively minimal, with framerates improving by 4-5 percent by dropping to minimum. I recommend you leave this at Epic if possible.

Shadows: This setting affects shadow mapping and is easily the most taxing of all the settings. Going from Epic to Low improves performance by about 80 percent. For competitive reasons, you can turn this off for a sizeable improvement to framerates and potentially better visibility of enemies.

Anti-Aliasing: Unreal Engine 4 uses post-processing techniques to do AA, with the result being a minor hit to performance for most GPUs. Turning this from max to min only improves performance by around 3 percent.

Textures: Provided you have sufficient VRAM (2GB for up to High, 4GB or more for Epic), this only has a small effect on performance. Dropping to low on the test GPUs only increases framerates by 1-2 percent.

Effects: Among other things, this setting affects ambient occlusion (a form of detail shadowing), some of the water effects, and whatever shader calculations are used to make the 'cloud shadows' on the landscape. It may also relate to things like explosions and other visual extras. Dropping to low improves performance by 15 percent, which can be beneficial for competitive players.

Post-Processing: This controls various post-processing effects other than AA, which seem to include contrast/brightness scaling and dynamic range calculations. It can cause a fairly large drop in performance: turning it to low improves framerates by about 15 percent.

Motion Blur: This is off by default, and I suggest leaving it that way. Motion blur can make it more difficult to spot enemies, and LCDs create a bit of motion blur on their own. If you want to enable this, it causes a 4-5 percent drop in framerates.
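The per-setting figures above are framerate gains, and they can be easier to compare as frame time savings. The sketch below converts each quoted gain; it uses midpoints for the ranges (an assumption for illustration), and the function name is hypothetical.

```python
def frame_time_saving(gain_percent):
    """Fraction of frame time saved when fps rises by gain_percent."""
    return 1 - 1 / (1 + gain_percent / 100)


# Gains from dropping each setting from Epic to its minimum, per the text.
# Ranges such as "4-5 percent" are represented by their midpoint.
setting_gains = {
    "View Distance": 4.5,
    "Shadows": 80,
    "Anti-Aliasing": 3,
    "Textures": 1.5,
    "Effects": 15,
    "Post-Processing": 15,
}

for name, gain in sorted(setting_gains.items(), key=lambda kv: -kv[1]):
    saving = frame_time_saving(gain)
    print(f"{name}: +{gain}% fps is roughly {saving:.1%} less time per frame")
```

Note that the individual gains were each measured in isolation, so they should not be expected to add up exactly to the gain from the Low preset.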

MSI provided all the hardware for this testing, consisting mostly of its Gaming/Gaming X graphics cards. These cards are designed to be fast but quiet, though the RX Vega cards are reference models and the RX 560 is an Aero model.

My main test system uses MSI's Z370 Gaming Pro Carbon AC with a Core i7-8700K as the primary processor, and 16GB of DDR4-3200 CL14 memory from G.Skill. I also tested performance with Ryzen processors on MSI's X370 Gaming Pro Carbon. The game is run from a Samsung 850 Pro SSD for all desktop GPUs. If you need more info, here are our best graphics cards right now.

MSI also provided three of its gaming notebooks for testing: the GS63VR with GTX 1060 6GB, GE63VR with GTX 1070, and GT73VR with GTX 1080. The GS63VR has a 4Kp60 display, while the GE63VR and GT73VR both have 1080p120 G-Sync displays. For the laptops, I installed the game to the secondary HDD storage.

Fortnite benchmarks

For the benchmarks, I've used Fortnite's replay feature using the viewpoint of the (significantly better than me) player that blew my head off. The replay does appear to stutter compared to regular gameplay, but this is not reflected in the framerates. Basically, the replay mode samples at a much lower tick rate, and position updates occur at that rate. I did test regular gameplay as well and found the results are comparable. Obviously, other areas of the map may perform better or worse, but Tilted Towers is a popular location and that's what I've selected for the benchmarks.
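For readers who want to run a similar replay test themselves, here is a minimal sketch of turning logged frame times into the average and minimum figures used in charts like these. The article does not state how its minimums are calculated; averaging the slowest 3 percent of frames is an assumption made here for illustration, and the function name is hypothetical.

```python
def summarize(frame_times_ms, worst_percent=3):
    """Return (average fps, minimum fps) for a list of frame times in ms.

    Assumption: 'minimum fps' is the average framerate over the slowest
    worst_percent of frames, rather than the single slowest frame.
    """
    n = len(frame_times_ms)
    avg_fps = 1000 * n / sum(frame_times_ms)

    # Take the slowest frames (largest frame times), at least one frame.
    count = max(1, n * worst_percent // 100)
    slowest = sorted(frame_times_ms, reverse=True)[:count]
    min_fps = 1000 * len(slowest) / sum(slowest)
    return avg_fps, min_fps


# Synthetic log: mostly 10 ms frames with a few 20 ms hitches.
frames = [10.0] * 97 + [20.0] * 3
avg, low = summarize(frames)
print(f"average {avg:.1f} fps, minimum {low:.1f} fps")
```

Averaging over total time rather than averaging per-frame fps values keeps a handful of very fast frames from inflating the result.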

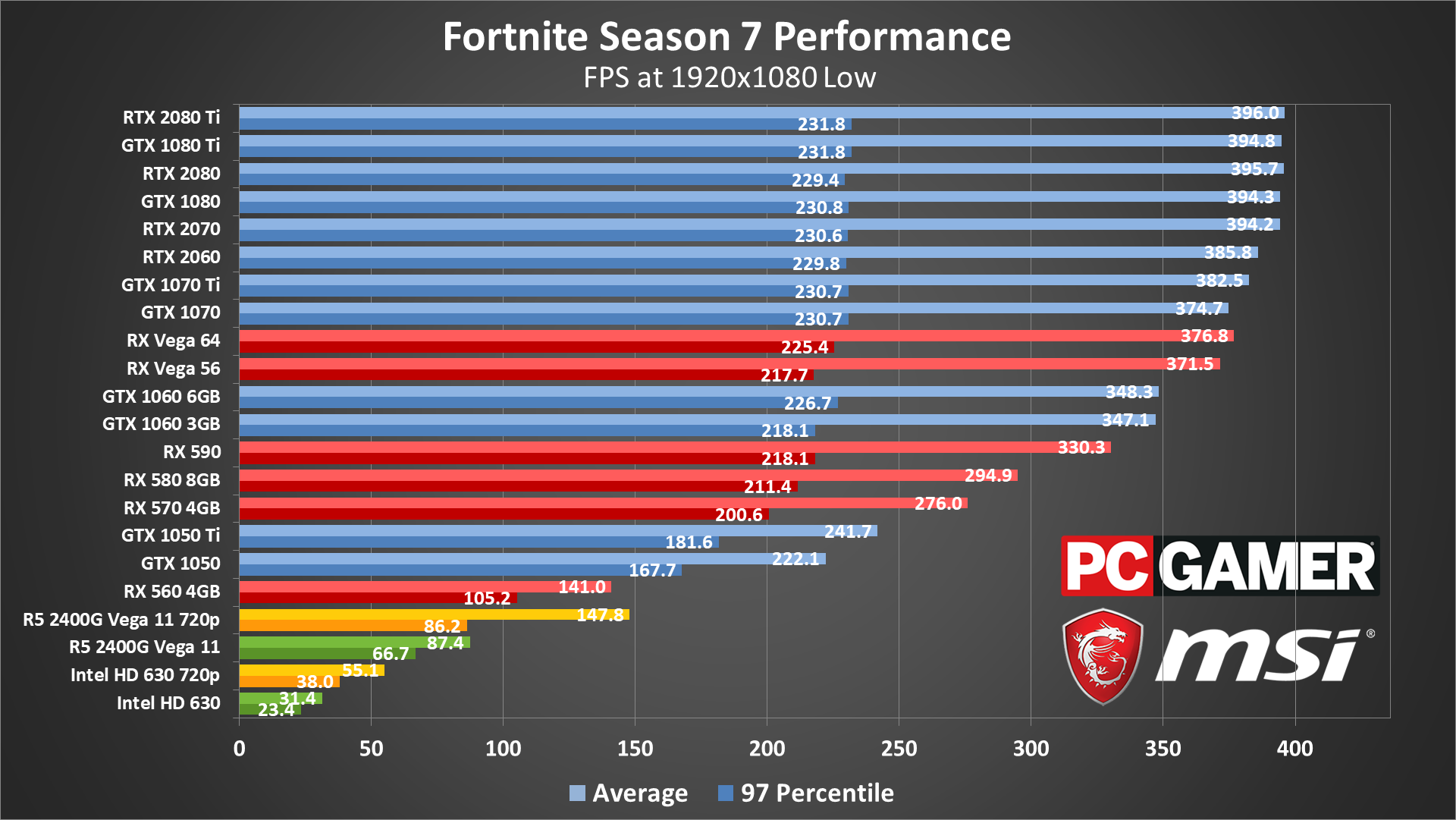

I've tested at five settings for each GPU: 1080p low, medium, and epic, plus 1440p and 4k at epic. I also tested integrated Intel and AMD graphics at 720p with the Low preset. Some players will choose to run at minimum graphics quality, except for the view distance, as it can potentially make it easier to spot opponents, and at those settings Fortnite is playable on most PCs.

At minimum quality, the fastest GPUs appear to be hitting a CPU bottleneck of around 400 fps, give or take. Minimum fps is around 230 fps, so if you have a 240Hz display you can make full use of it. All of the dedicated GPUs average far more than 60fps, with even the RX 560 4GB hitting 141fps. Even the Vega 11 integrated graphics breaks 60fps at 1080p.

For Intel graphics, 1080p on the modern HD Graphics 630 is mostly playable, and dropping to 720p provides a reasonably smooth experience. It's not 60fps smooth, and older Intel GPUs like the HD 4000 are quite a bit slower, but it's at least viable.

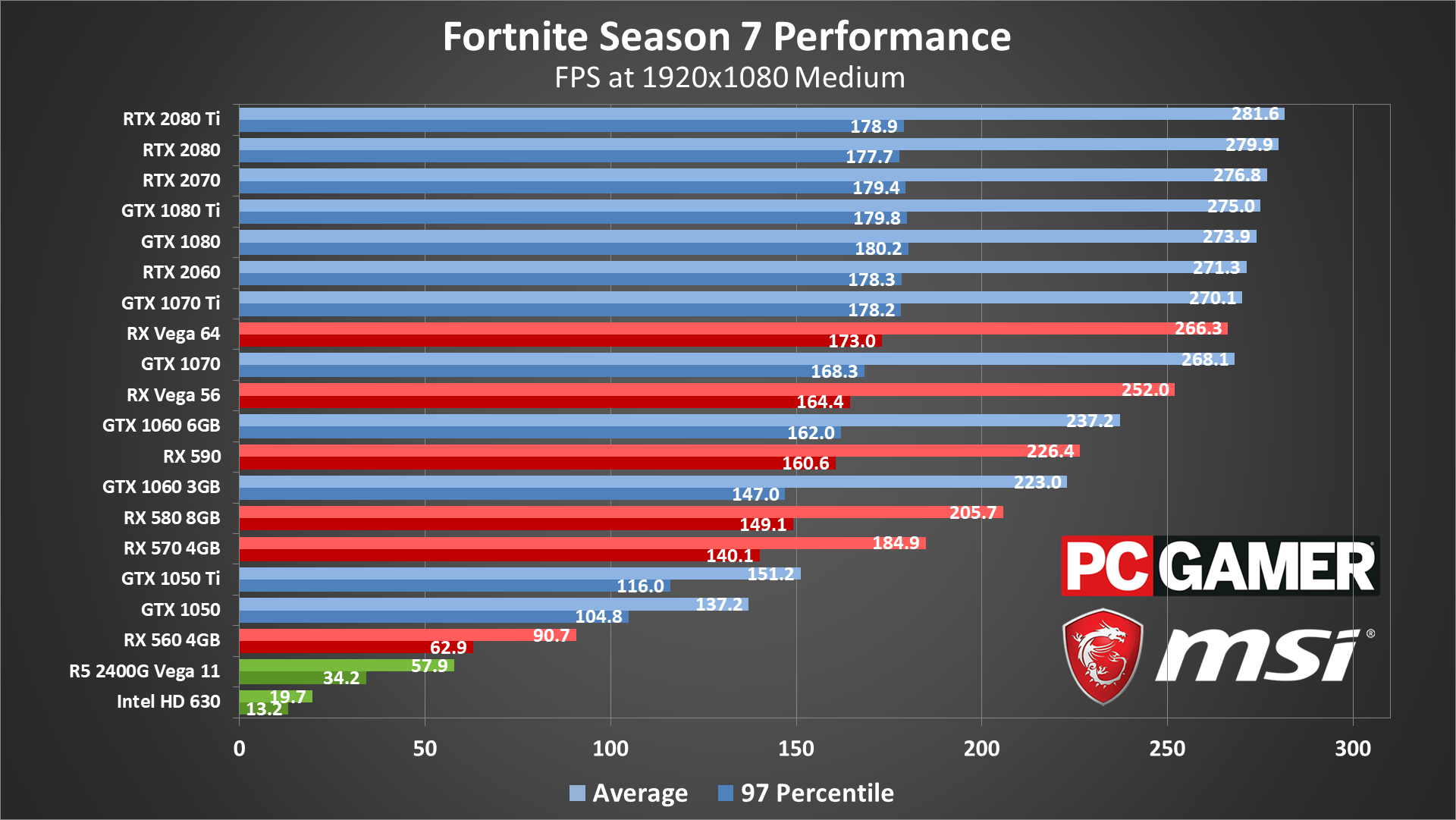

At 1080p medium, the faster GPUs still hit a performance limit of around 270-280 fps, with minimums far above 144fps. Nvidia GPUs have a clear performance advantage, though it's not really a problem as all of the framerates are quite high. If you're trying to stay above 144fps minimums, however, you'd want a GTX 1060 or better.

For budget cards where you'd want to run 1080p medium, the 1050 and 1050 Ti are vastly superior to the RX 560. The GTX 1060 cards also surpass the RX 570 and 580, though the RX 590 does manage to beat at least the GTX 1060 3GB. But if we're looking strictly at acceptable performance, all the GPUs still break 60fps.

For integrated graphics, Intel's HD Graphics 630 only gets 20fps now, while AMD's Vega 11 still averages nearly 60fps, but with minimums closer to 30fps. The HD Graphics 630 is about 20-30 percent faster than the HD Graphics 4600 found in 4th gen CPUs, which in turn is 10-20 percent faster than an HD 4000.

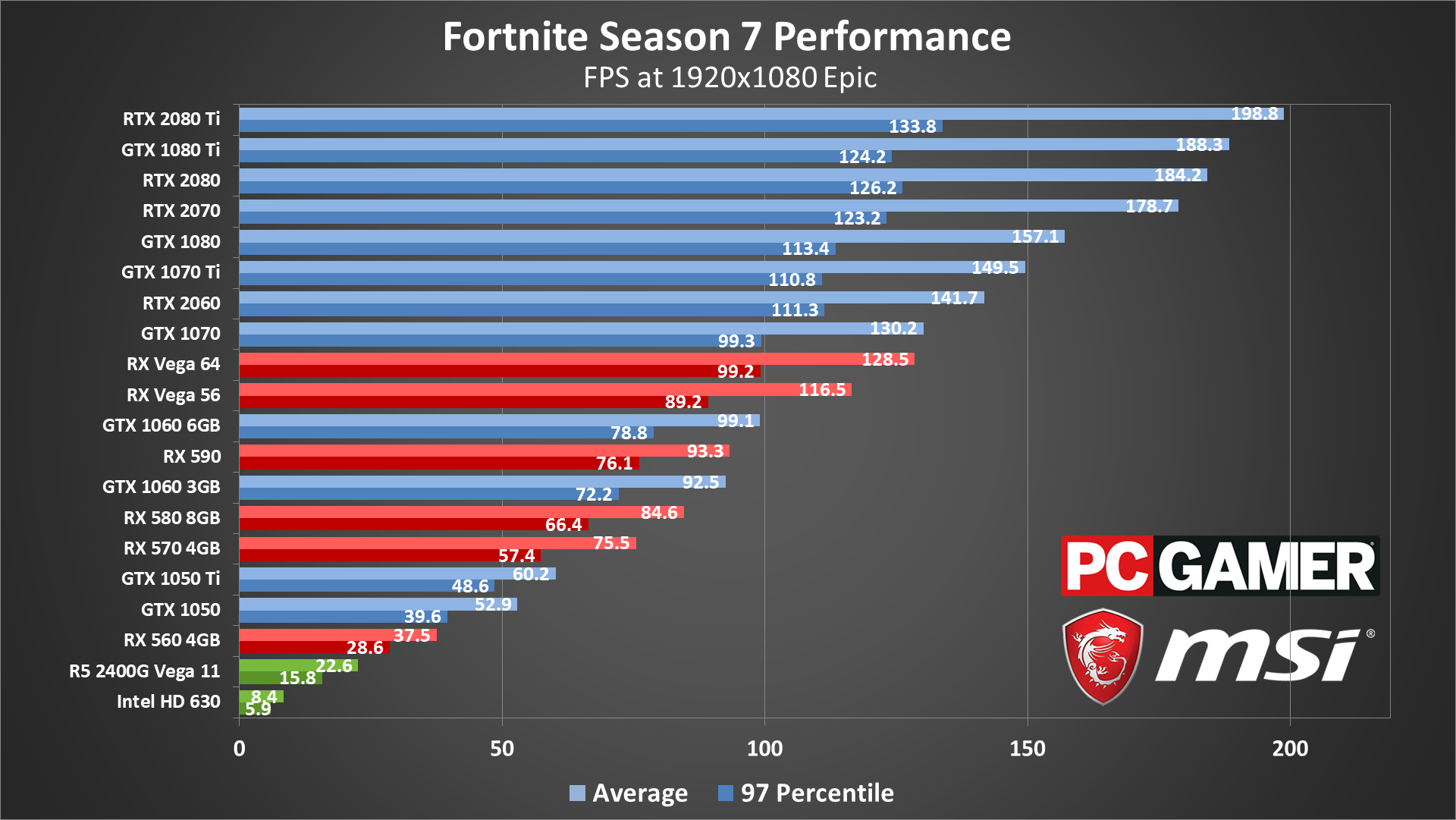

1080p epic quality cuts performance by more than half on most of the GPUs, though the fastest cards may still be hitting other bottlenecks. The RX 570 4GB and above continue to break 60fps, while the budget cards generally fall in the 40-60 fps range. For 144Hz displays, you'd want a GTX 1070 Ti or better.

Nvidia GPUs continue to outperform the AMD offerings, with the GTX 1070 beating even the RX Vega 64. That's better than how things looked earlier this year, but overall the Unreal Engine 4 games tend to favor Team Green.

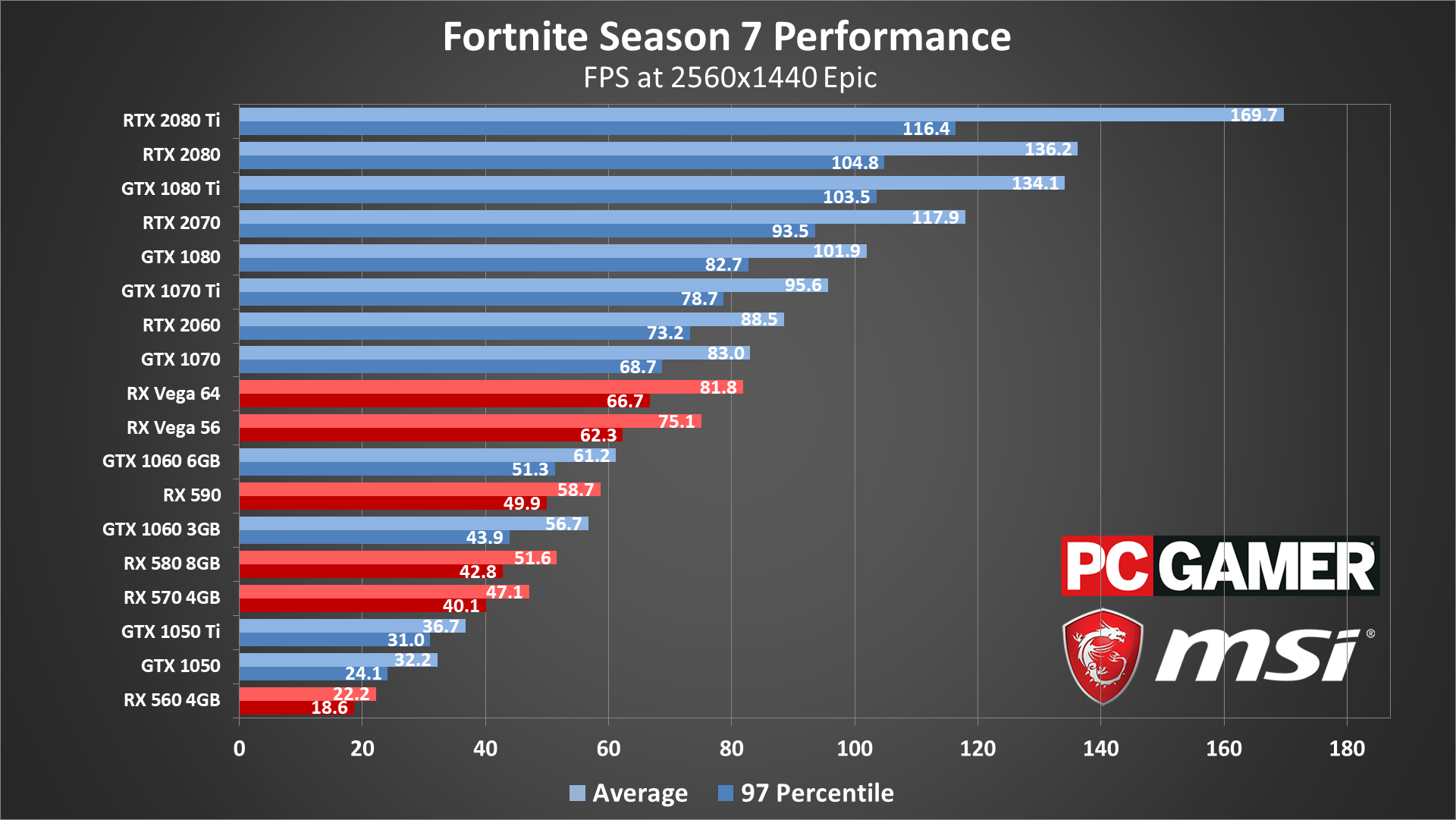

1440p epic starts to favor Nvidia's newer RTX cards a bit more, likely thanks to the additional bandwidth of GDDR6. Overall, the Vega 56 and above continue to break 60fps, while only the RTX 2080 Ti can break 144fps.

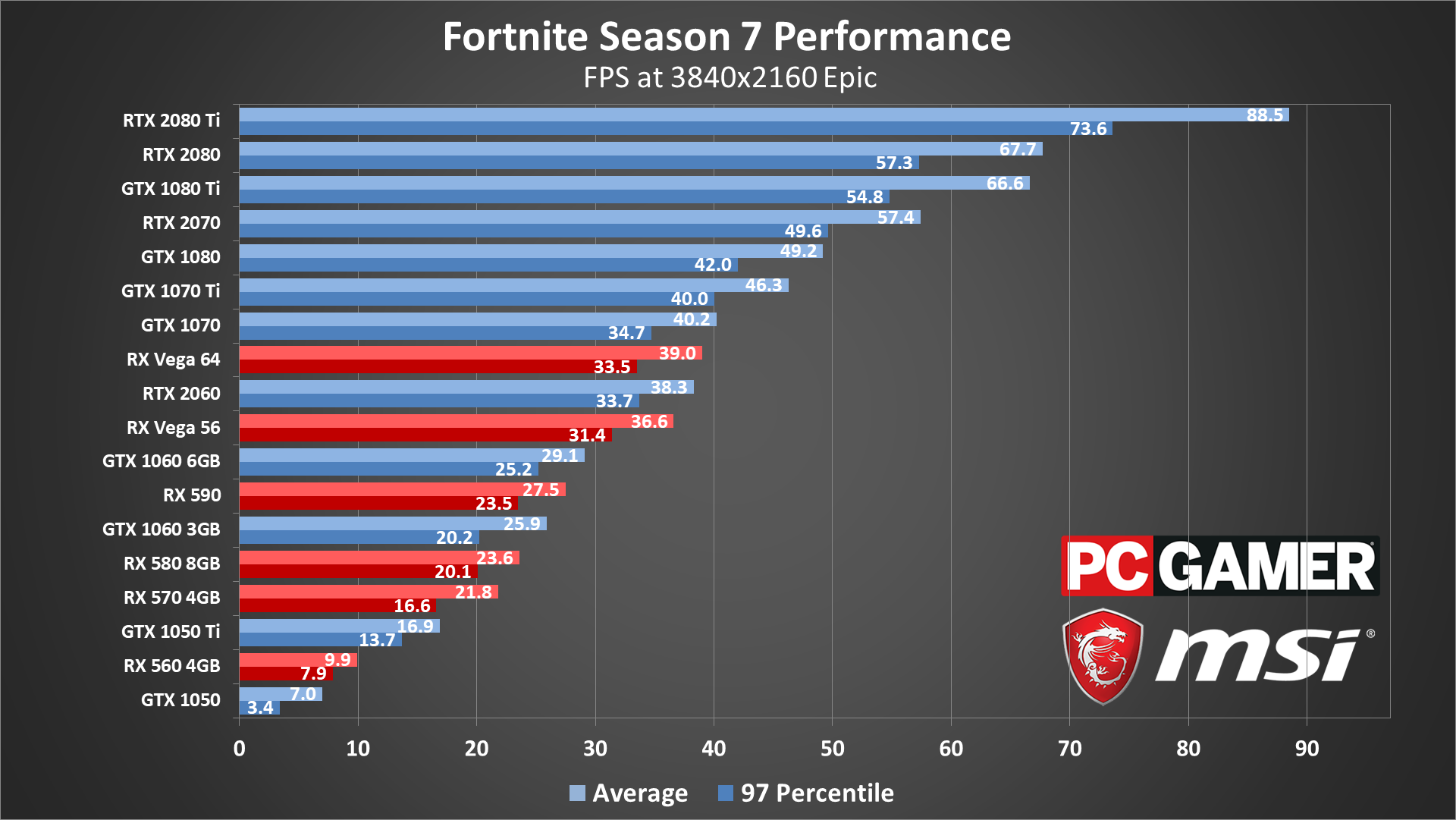

What does it take to push more than 60fps at 4k epic? The GTX 1080 Ti, RTX 2080, and RTX 2080 Ti all average more than 60fps, though only the 2080 Ti maintains minimum fps above 60. Otherwise the rankings remain about the same as at 1440p, with about half the performance.
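The drop from 1440p to 4k lines up reasonably well with the pixel counts involved. As a quick sketch (the resolution table and function name are just illustrative):

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4k": (3840, 2160),
}


def pixel_ratio(target, baseline):
    """How many times more pixels target has than baseline."""
    tw, th = RESOLUTIONS[target]
    bw, bh = RESOLUTIONS[baseline]
    return (tw * th) / (bw * bh)


# 4k has 2.25x the pixels of 1440p and 4x the pixels of 1080p.
for target, baseline in [("1440p", "1080p"), ("4k", "1440p"), ("4k", "1080p")]:
    print(f"{target} vs {baseline}: {pixel_ratio(target, baseline):.2f}x the pixels")
```

If performance scaled purely with pixel count, 4k would run at about 44 percent of the 1440p framerate, so "about half" suggests that not all of the per-frame work depends on resolution.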

Fortnite CPU performance

I've done CPU testing with several of Intel's latest i3, i5, and i7 parts, and a couple of AMD's second generation Ryzen parts. All of these benchmarks used the RTX 2080, which is mostly similar to the GTX 1080 Ti in performance. This is to emphasize CPU differences. If you're running a mainstream GPU like a GTX 1060, performance would be far closer for any of these CPUs. Since the initial launch, Fortnite has also seen improvements in multi-threaded performance, which helps most CPUs hit more than playable framerates.

Intel's CPUs lead at nearly all resolutions, with the i5-8400 beating any of AMD's Ryzen processors. Even the i3-8100 comes relatively close to the Ryzen processors, and at 1440p and 4k it even takes the lead, though 4k is effectively a 6-way tie.

Fortnite notebook performance

Wrapping up the benchmarks, I've only run 1080p tests on the notebooks, since two of them have 1080p displays. Needless to say, with lower CPU clockspeeds and fewer CPU cores, the desktop GPUs easily outperform the notebook cards at 1080p low and medium. 1080p Epic finally sees the GT73VR pass the GTX 1060, but the CPU is still holding it back. Given the multi-threading support of the current Fortnite release, the 6-core 8th Gen Intel CPUs should do quite a bit better.

Fortnite closing thoughts

Benchmarking Fortnite can be a bit problematic thanks to the randomized Battle Bus starting path, player movements, and more. Thankfully, the replay feature helps minimize variability, making the results of this second batch of testing far more useful.

Thanks again to MSI for providing the hardware. All the updated testing was done with the latest Nvidia and AMD drivers at the time of publication, Nvidia 417.35 and AMD 18.12.3. Nvidia's GPUs are currently the better choice for Fortnite, though if you have an AMD card you don't need to worry—they'll generally break 60fps at the appropriate settings just as well.

These updated test results were collected in late December 2018. Given the continued popularity of Fortnite, we may revisit performance again with a future update, especially if the engine or performance change again. These results might be a snapshot in time rather than the final word on Fortnite performance, but with the right hardware you can run any reasonable settings at high framerates. And if you're hoping to climb the competitive ladder, just drop everything but view distance to low and work on honing your skills.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
